基于hadoop集群的hive 安装及异常解决方法

第一部分:软件准备与环境规划

Hadoop环境介绍

?Hadoop安装路径

?/usr/local/hadoop

Hive环境规划

?Hive安装路径

?/usr/local/hive

?Hive数据存放路径

?hdfs

?/user/hive/warehouse

?Hive元数据

?第三方数据库

?derby mysql

软件准备

?OS

?ubuntu

?JDK

?java 1.6.0_27

?Hadoop

?hadoop-1.0.0.tar

?Hive

?hive-0.8.1.tar

?

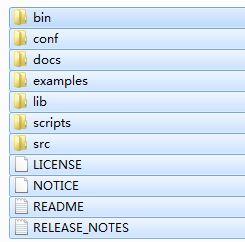

第二部分:Hive项目介绍 项目结构

?

Hive配置文件介绍?

?hive-site.xml????? hive的配置文件?hive-env.sh??????? hive的运行环境文件 ?hive-default.xml.template? 默认模板 ?hive-env.sh.template???? hive-env.sh默认配置 ?hive-exec-log4j.properties.template?? exec默认配置 ??hive-log4j.properties.template log默认配置 hive-env.sh <property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value> <description>JDBC connect string for a JDBC metastore</description> </property> <property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.mysql.jdbc.Driver</value> <description>Driver class name for a JDBC metastore</description> </property> <property> <name>javax.jdo.option.ConnectionUserName</name> <value>hive</value> <description>username to use against metastore database</description> </property> <property> <name>javax.jdo.option.ConnectionPassword</name> <value>hive</value> <description>password to use against metastore database</description> </property>?页

4)把MySQL的JDBC驱动包(我使用的是mysql-connector-java-5.0.8-bin.jar,从http://downloads.mysql.com/archives/mysql-connector-java-5.0/mysql-connector-java-5.0.8.tar.gz下载并解压后可以找到)复制到Hive的lib目录下。

启动Hive shell,执行

show tables;

如果不报错,表明基于独立元数据库的Hive已经安装成功了。

查看一下元数据的效果。

在Hive上建立数据表:

CREATE TABLE my(id INT,name string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

show tables;

select name from my;

然后我们以刚刚建立的hive帐号登录MySQL查看元数据信息。

mysql> use hive

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+-----------------+

| Tables_in_hive? |

+-----------------+

| BUCKETING_COLS? |

| COLUMNS???????? |

| DATABASE_PARAMS |

| DBS???????????? |

| PARTITION_KEYS? |

| SDS???????????? |

| SD_PARAMS?????? |

| SEQUENCE_TABLE? |

| SERDES????????? |

| SERDE_PARAMS??? |

| SORT_COLS?????? |

| TABLE_PARAMS??? |

| TBLS??????????? |

+-----------------+

13 rows in set (0.00 sec)

mysql> select * from TBLS;

+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+

| TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER? | RETENTION | SD_ID | TBL_NAME | TBL_TYPE????? | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT |

+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+

|????? 1 |? 1319445990 |???? 1 |??????????????? 0 | hadoop |???????? 0 |???? 1 | my?????? | MANAGED_TABLE | NULL?????????????? | NULL?????????????? |

+--------+-------------+-------+------------------+--------+-----------+-------+----------+---------------+--------------------+--------------------+

1 row in set (0.00 sec)

在TBLS中可以看到Hive表的元数据。

异常描述:

hive> show tables;

FAILED: Error in metadata: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask

解决方法:

??? 引起这种异常的可能性较多,这里只是列举了一些相关的注意事项以供参考,详细大家可以通过网络找更多相关的内容.

??? 1? 删除? $HADOOP_HOME/build 目录(如果当时通过下载gz包进行编译安装的话,会残留部分信息,删除这个目录是必须的)

??? 2? ./hive -hiveconf hive.root.logger=DEBUG,console? 进行调试模式,根据问题逐步解决

??? 3? 确认 $HIVE_HOME/lib 下存在 mysql-connector-java-5.1.25-bin.jar? hadoop-core-1.2.0.jar 主包

??? 4? 了解Hive metastore三种存储方式,确认自身配置的模式是否正确

异常描述:

java.sql.SQLException: Invalid authorization specification,? message from server: "Access denied for user 'root'@'localhost' (using password: YES)"

java.sql.SQLException: Syntax error or access violation,? message from server: "Access denied for user 'hadoop'@'localhost' to database 'metastore_db'"

解决方法:

这是因为mysql远程访问的权限问题,执行 grant all on *.*? to 'hadoop'@'%' identified by 'hadoop'; 即可。

INSERT INTO USER(HOST,USER,PASSWORD) VALUES("localhost","hadoop",PASSWORD("hadoop"));

GRANT ALL PRIVILEGES ON * TO 'hadoop'@'localhost' IDENTIFIED BY 'hadoop' WITH GRANT OPTION;

FLUSH PRIVILEGES;

?

异常描述:

java.sql.SQLException: Syntax error or access violation,? message from server: "Specified key was too long; max key length is 767 bytes"

解决方法:

一般是由于mysql的最大索引长度导致,看了一个人的文章颇有感触下面给出链接:

http://blog.csdn.net/cindy9902/article/details/6215769

1、数据库的字符集除了system为utf8,其他最好为latin1,否则可能出现如上异常。

2、set global binlog_format='MIXED';READ-COMMITTED需要把bin-log以mixed方式来记录

改完上述两种方法后,我还是会出现以上问题,我把mysql的metastore_db里面的所有表删除,hadoop重启,然后重新启动hive之后就能够正常删除表了。

问题解决。